The differences between CPUs and GPUs

Despite widespread confusion about the differences between CPUs and GPUs, these two processor units perform very different tasks.

Intel released its 4004 commercial microprocessor in 1971, inadvertently kickstarting the computing revolution.

Back then, many computers were the size of family cars, and the idea of home computing still seemed fanciful.

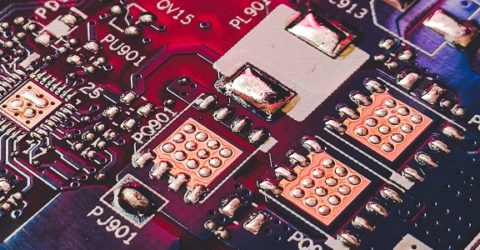

Yet the 4004 chip demonstrated how a central processing unit (or CPU) could condense thousands of transistors onto something that resembled a small copper centipede.

For decades, CPUs acted as the central nervous system inside electronic devices. They processed instructions, controlled peripherals and distributed data.

Indeed, it’s commonly assumed by people with limited computing knowledge that a CPU is the only ‘intelligent’ part of a computer or electronic device.

In making this assumption, they’re overlooking the CPU’s close cousin: the graphics processing unit, or GPU. Gamers make this error at their peril.

Dustin down an analogy

Fans of 1980s films might like to view the differences between CPUs and GPUs as the differences between the two principal characters in Rain Man.

A CPU is a good all-rounder – unexceptional but highly competent at multitasking across different areas.

A GPU is extremely limited in most regards, but it has astonishing abilities in specific areas – most notably the rendering and displaying of computer graphics.

The physical design of a GPU is very different to that of its sibling.

A consumer CPU contains a relatively small number of cores, from a couple in basic chips to a few dozen at the high end (hence terms like quad-core being used in marketing literature), each of which typically works through one task at a time.

Conversely, a GPU is packed with thousands of cores. Their sheer volume enables huge numbers of relatively basic tasks to be carried out in parallel.

In terms of graphics, that could involve rendering backgrounds, shading skies, displaying movement and projecting depth, all simultaneously.

This parallel processing requires the cores to work harmoniously and optimise efficiency, whereas a CPU’s cores are intended to handle more complex and long-lasting sequential processing.
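For readers who like to see ideas in code, the contrast can be sketched in a few lines of Python. This is only an illustration of the two styles of work, not how a real GPU is programmed: the function names and pixel values are invented for the example, and Python threads merely stand in for GPU cores without delivering the same speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def shade_pixel(brightness):
    """A deliberately basic task (hypothetical): halve one pixel's brightness."""
    return brightness // 2


def shade_one_by_one(pixels):
    """CPU-style: a single worker handles each pixel in turn."""
    return [shade_pixel(p) for p in pixels]


def shade_in_parallel(pixels, workers=4):
    """GPU-style: several workers share out the pixels between them.

    This only works because no pixel's result depends on any other pixel.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input
        return list(pool.map(shade_pixel, pixels))


def running_total(values):
    """A sequential task: every step needs the result of the step before it,
    so the work cannot be shared out as naively as the pixels above."""
    total = 0
    totals = []
    for value in values:
        total += value
        totals.append(total)
    return totals


pixels = [200, 100, 50, 255]  # made-up brightness values
print(shade_one_by_one(pixels))
print(shade_in_parallel(pixels))
print(running_total([1, 2, 3]))
```

Both shading functions give the same answer; the difference is that the second could be spread across thousands of cores if they were available, whereas the running total is the kind of step-by-step job better suited to a CPU core.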

Graphic content

The immense power offered by GPUs was quickly identified as suitable for areas other than video acceleration and transcoding (converting video files into different formats).

Today, GPUs handle complex modelling work like machine learning: ‘teaching’ a neural network how to interpret data, as voice recognition software does, or how to perform tasks such as driving a vehicle.

They’re lashed together in giant networks to undertake the advanced mathematical calculations involved in cryptocurrency mining.

GPU computation has long been used for scientific purposes, from molecular modelling to weather forecasting and climate change analysis.

A CPU would struggle in these scenarios because it couldn’t process so much data in parallel quickly enough, just as a GPU couldn’t run a modern-day tablet or laptop’s peripherals and software on its own.

Most consumer devices are underpinned by a CPU, typically manufactured by industry pioneers Intel or their arch-rivals Advanced Micro Devices (AMD).

And while understanding the differences between CPUs and GPUs isn’t vital when buying consumer goods, the distinctions are very important when investing in gaming technology…