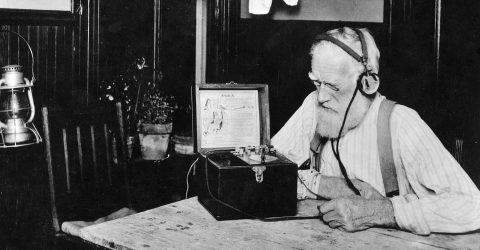

Alexa’s army of listening gnomes

A Bloomberg report has revealed the inner workings of Alexa’s army of listening gnomes, operating out of countries such as Romania. These ‘machine learning data analysts’ review audio clips to improve Alexa’s responses to commands.

Amazon regularly sends fragments of recordings to these training teams to improve Alexa’s speech recognition. Thousands of people do this work in places as far apart as Boston in the US, India and Romania.

Amazon had never publicly acknowledged the existence of this process or the extent of human involvement, nor had it disclosed its retention policies for these snippets of audio.

Given questions of accountability over who handles our audio, there has been some disquiet about who is doing the listening. As the Financial Times (FT) noted, “supervised learning requires what is known as ‘human intelligence’ to train algorithms, which very often means cheap labour in the developing world.”

The FT found one advert offering 25 cents for 12 minutes spent teaching an algorithm to guide a green triangle through a maze to a green square. At five such tasks an hour, that equates to an hourly rate of just $1.25.

Alexa works by responding to ‘wake words’. Because it can easily mistake other sounds and similar-sounding words for a wake word, the teams listen to snippets of people’s conversations to improve how reliably Alexa recognises the default wake words.

Inevitably, the teams are regularly exposed to embarrassing and disturbing material. Amazon said that counselling was offered but would not say how extensive it was.

Likewise, Amazon has not been fully transparent about its retention policies. The retention of audio files is supposedly voluntary, but this is not clear from the information Amazon gives its users. Both Amazon and Google allow voice recordings to be deleted from your account, but it is unclear whether deletion is permanent. The recordings could still be passed on and used for training purposes without your knowledge.

Privacy campaigners have long argued that voice platforms like Alexa should offer an ‘auto purge’ function. This would let users automatically delete recordings older than, say, a day or 30 days, and would guarantee that once a file is deleted, it is gone for good.

So remember: be careful what you say to Alexa. Someone, somewhere, could be listening and trying to work out exactly what you said and, more importantly, what you meant.

Image: US Dept of Agriculture